Read how to balance the pursuit of innovative generative AI-driven features with practical, user-friendly product design.

AI, particularly generative AI, is a hot topic captivating everyone’s imagination from LinkedIn to Friday night bars. Companies seem to be in an arms race to deploy AI features, but unfortunately, many of these rushed products fail or have to be rolled back. Moreover, models trained on copyrighted or biased data can lead to lawsuits and even business collapse. Therefore, it is crucial for companies to remain grounded in solving real user problems and delivering value.

When it is focused on core user needs, such as solving an existing pain point, improving productivity, rethinking existing experiences, or offering unique personalised interactions, AI can significantly enhance product functionality. However, it is equally important to recognise the limitations and potential pitfalls of AI, such as ethical concerns, technical challenges like hallucinations, data biases, and the high cost of implementation.

When to Use Generative AI:

- Focus on core user needs: AI is a tool, not a goal. Start by identifying real human problems and assess if AI can solve them better than existing solutions. The goal is to make users more efficient and effective. For example, meeting summarisation features in apps like Teams and Zoom save time by providing key takeaways and action items, eliminating the need to rewatch long recordings.

- Improve productivity of existing tasks: AI excels at automating repetitive tasks, freeing users for work requiring human expertise. GitHub Copilot assists with coding, saving developers time for complex problem-solving. In a GitHub survey, 88% of developers using Copilot reported feeling more productive.

- Rethink existing experiences: AI can create significant value by improving existing experiences. Perplexity AI provides direct answers to questions with citations, offering a more user-friendly search experience than traditional search engines. It summarises information with annotated links and suggests follow-up questions to refine searches.

- Personalised experiences: Generative AI can tailor content to individual preferences. Netflix famously customises content thumbnails for each user. When users browse Netflix, they are not shown generic images but thumbnails that have been specifically chosen or generated based on their viewing history and preferences. This makes it easier for users to find something appealing to watch, driving higher engagement and product satisfaction.

When to Avoid Generative AI:

- Maslow’s Hammer: Avoid the “hammer-everything” approach. Just because you have a new tool (AI) doesn’t mean it’s the right solution for every problem. For instance, Air Canada’s AI chatbot misrepresented the airline’s bereavement policy, leading to a tribunal case that the airline lost. While a customer service chatbot powered by a large language model (LLM) sounds appealing, LLMs can generate inaccurate outputs (hallucinations). In this case, a traditional chatbot with guardrails would have been a better solution.

- Lack of appropriate training data: The rapid pace of AI development can lead to models being trained on inappropriate data. For example, Getty Images sued Stability AI (creators of Stable Diffusion), alleging that the model was trained on its images without a licence; some generated images even reproduced the Getty watermark. Similarly, poorly handled bias can lead to problematic outputs, as seen when Google’s Gemini image generator produced racially diverse depictions of Nazi-era German soldiers.

- No ROI: Despite decreasing costs, generative AI remains computationally expensive. According to a Goldman Sachs report, there is too much spend on generative AI for too little value. Companies must carefully assess the return on investment (ROI) before deploying it. Start small and experiment to quantify the value, then determine whether that value is durable and sticky.

- Ethical or legal concerns: Avoid generative AI in situations where outcomes can have serious ethical or legal implications. For example, using AI for policing or legal services could lead to wrongful arrests caused by model bias or faulty facial recognition. Likewise, unreliable medical advice from AI can lead to misdiagnosis or inappropriate treatment, causing harm to the patient.
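To make the "traditional chatbot with guardrails" idea from the Air Canada example more concrete, here is a minimal sketch in Python. It is not how any airline's system works. The topic names, the placeholder policy text, and the `answer` function are all hypothetical and exist only for illustration. The point is the guardrail itself: the bot replies only with pre-approved text and hands off to a human for anything it does not recognise, rather than improvising.

```python
from dataclasses import dataclass

# Hypothetical, hand-approved snippets. A real system would load reviewed
# policy text from a maintained knowledge base instead of hard-coding it.
APPROVED_ANSWERS = {
    "bereavement": "PLACEHOLDER: approved bereavement policy text goes here.",
    "baggage": "PLACEHOLDER: approved baggage policy text goes here.",
}

HANDOFF_MESSAGE = "I can't answer that reliably. Let me connect you with a human agent."


@dataclass
class Reply:
    text: str
    source: str  # topic key of the approved answer, or "handoff"


def answer(message: str) -> Reply:
    """Return an approved answer if a known topic is mentioned, else hand off."""
    lowered = message.lower()
    for topic, text in APPROVED_ANSWERS.items():
        if topic in lowered:
            return Reply(text=text, source=topic)
    # Guardrail: never generate an answer for an unrecognised question.
    return Reply(text=HANDOFF_MESSAGE, source="handoff")


if __name__ == "__main__":
    print(answer("What is your bereavement policy?").source)
    print(answer("Can I bring my pet iguana on board?").source)
```

Keyword matching is deliberately crude here. The trade-off it illustrates is that a narrower bot answers fewer questions, but every answer it does give has been reviewed by a person.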

In the pursuit of generative AI, the true measure of success will not be the technology itself but its meaningful impact on users’ lives. Resist the temptation to implement AI for the sake of it. Instead, focus on enhancing the user experience by addressing genuine user needs in ways that truly resonate with users. At the same time, it is important to navigate the ethical, technical, legal and economic challenges. Don’t get lost in the generative AI hype! So the next time you think of implementing generative AI, ask yourself: What user problem are we solving, and why is AI a better solution than a non-AI one?

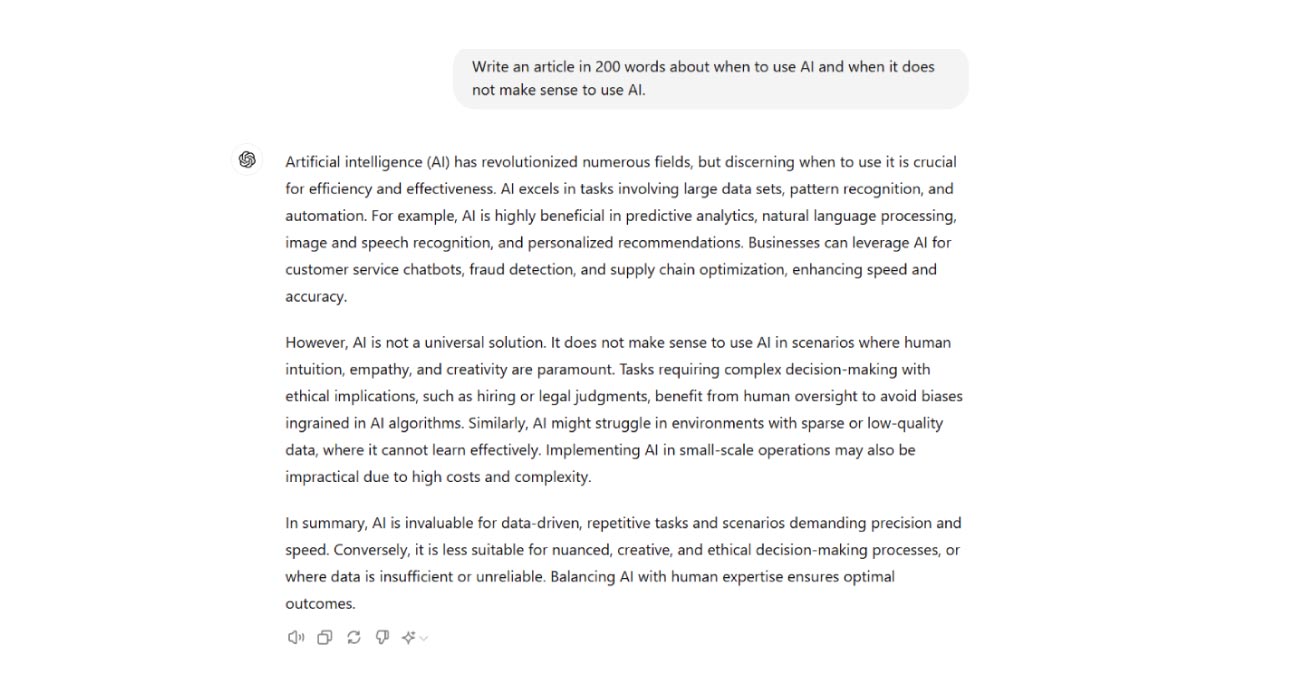

As a thought exercise, I put this question to ChatGPT. Who do you think did better?
